Introduction

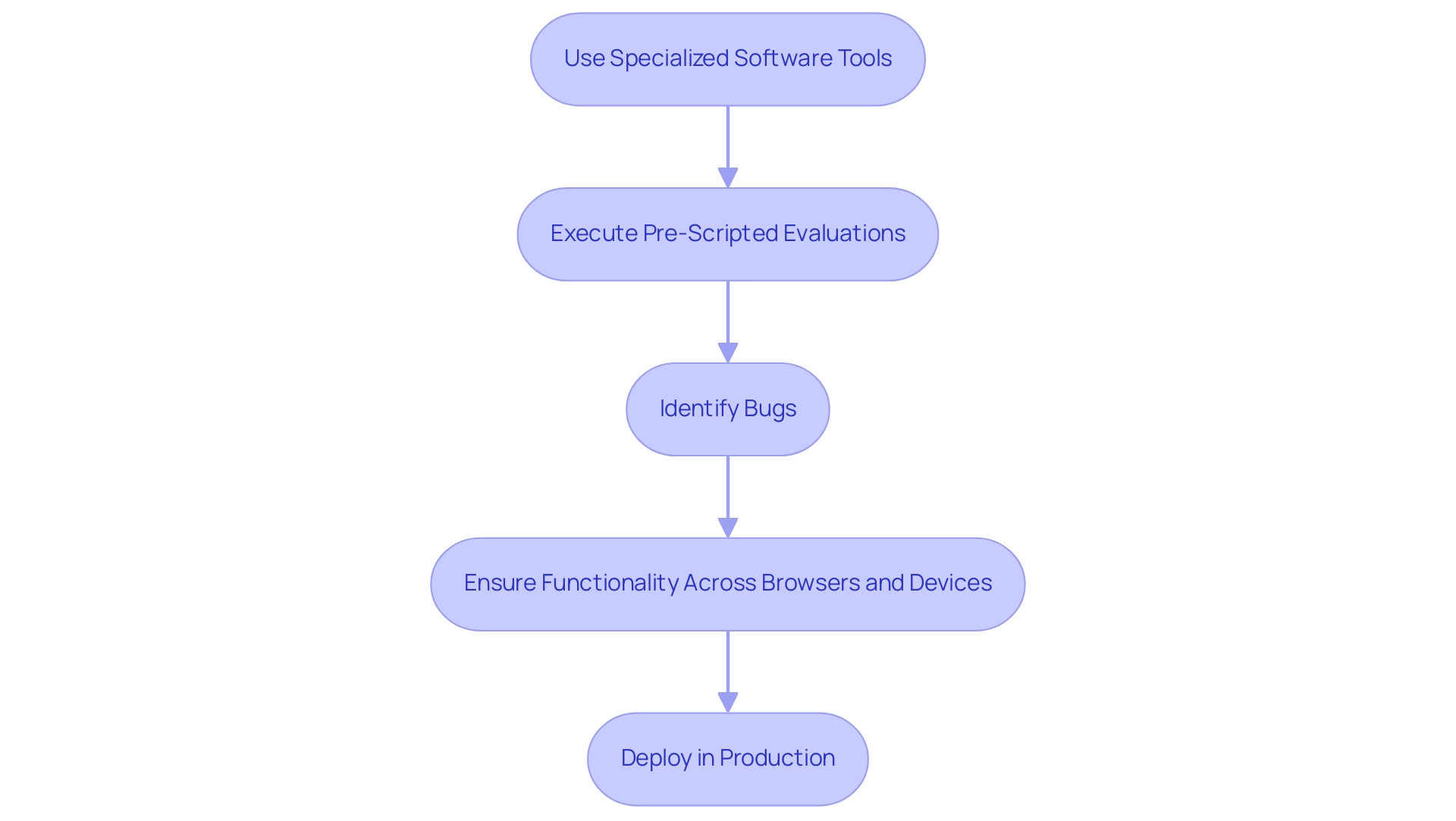

In the rapidly evolving landscape of web application development, the importance of test automation cannot be overstated. As applications grow in complexity, ensuring their reliability and performance becomes a paramount concern for developers and stakeholders alike. Test automation emerges as a powerful solution, employing specialized tools to execute pre-scripted tests that identify bugs and validate functionality across diverse environments.

This article delves into the fundamental principles of test automation, the various types of automated tests, and the challenges organizations face in implementation. Furthermore, it highlights best practices that can elevate automation efforts, ultimately driving efficiency and quality in software delivery. Understanding these elements is crucial for any team looking to navigate the intricate world of automated testing successfully.

Understanding Test Automation for Web Applications

Test automation for web applications uses specialized software tools to run pre-scripted tests against a web application before it is deployed to production. This helps identify bugs and confirms that the software works as intended across browsers and devices. Unlike manual testing, which can be time-consuming and prone to human error, automated tests run quickly and consistently, improving efficiency and accuracy throughout the development lifecycle. As web applications grow more complex, efficient test automation becomes increasingly important.

Fundamentals of Test Automation: Key Principles

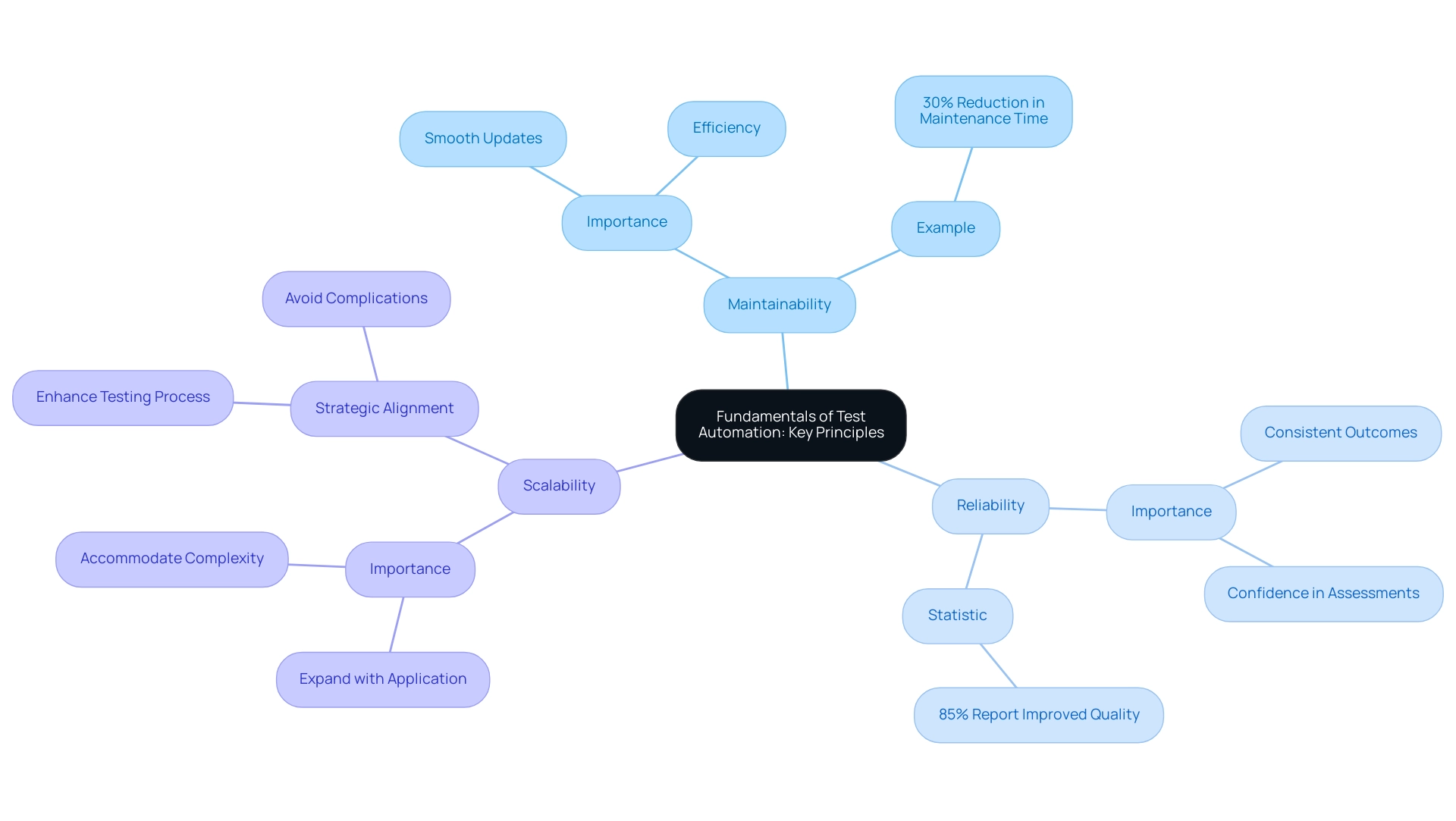

The key principles of test automation, namely maintainability, reliability, and scalability, are essential to the success of any automation initiative. Maintainability makes it easy to update automated tests as the software evolves, so that they stay relevant and effective. For instance, a case study from a leading e-commerce platform demonstrated that implementing maintainability best practices reduced their maintenance time by 30%, allowing for quicker adaptations to their application updates.

Reliability matters because it ensures that tests consistently produce accurate results, which builds confidence in the testing process. According to recent statistics, 85% of organizations that prioritize reliability in their test automation report improved product quality in 2024.

Scalability ensures that the framework can grow with the application, accommodating increased complexity and volume without compromising performance. To achieve these objectives, it is crucial to establish a clear testing strategy that aligns with overarching development goals. This alignment ensures that automation enhances the testing process rather than complicating it.

As Geosley Andrades, a Quality Assurance Advocate at ACCELQ, puts it:

Being enthusiastic about ongoing education, we strive to make process management simpler, more dependable, and sustainable for the real world.

By adhering to these principles—maintainability, reliability, and scalability—organizations can build a robust automation framework that not only meets current demands but also adapts to future challenges.

Exploring Different Types of Automated Tests

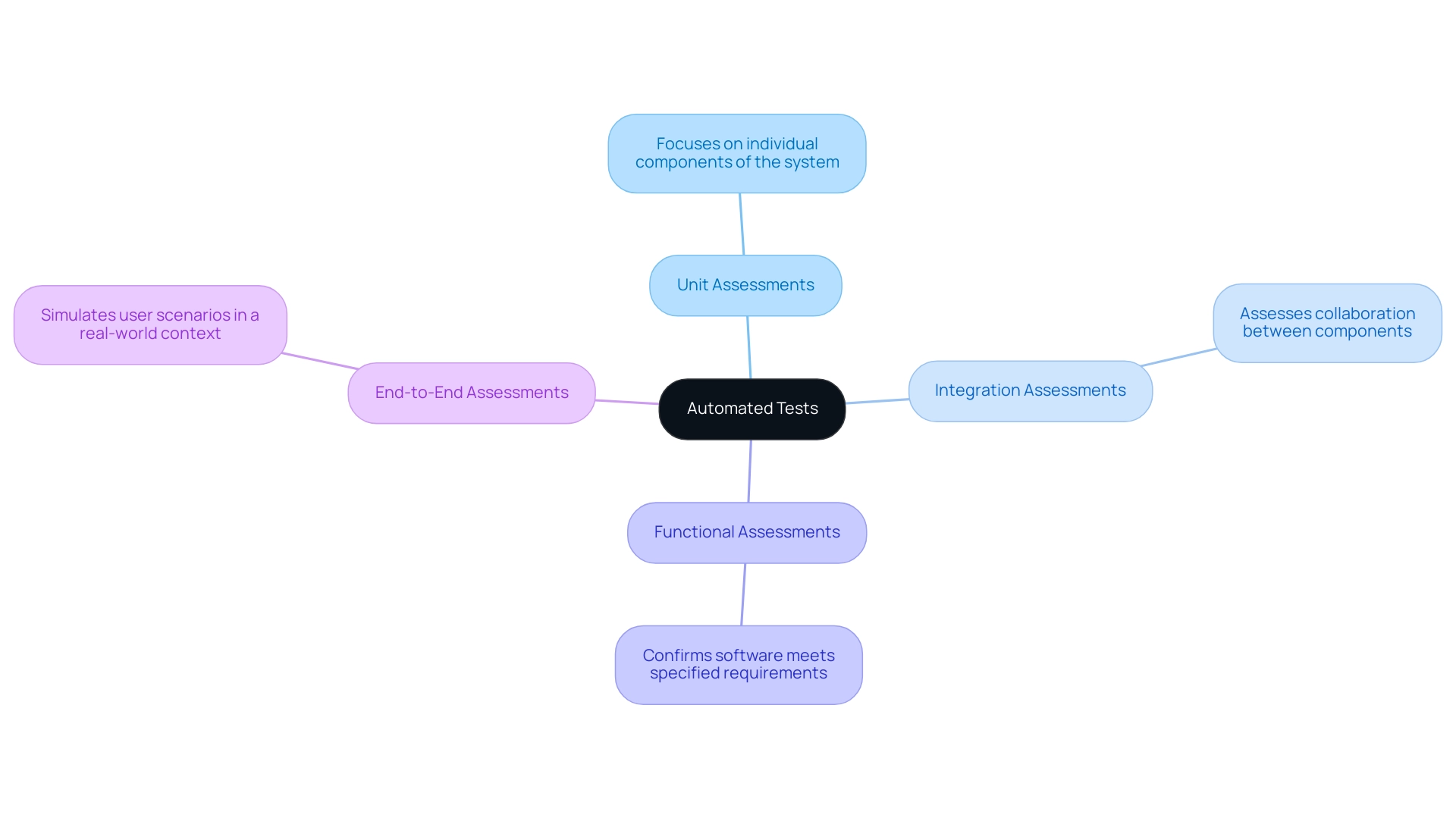

Automated tests can be classified into several types, including:

- Unit tests

- Integration tests

- Functional tests

- End-to-end tests

Unit tests focus on individual components of the system to verify that each one works correctly in isolation. Integration tests check how components work together, while functional tests confirm that the software meets its specified requirements. End-to-end tests simulate user scenarios to exercise the software in a real-world context. Understanding these categories allows teams to implement a comprehensive testing strategy that addresses various aspects of the application.
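The difference between the first two categories can be sketched in a few lines of Python. This is a minimal illustration, not a recommended project layout: `apply_discount` and `Cart` are hypothetical application code invented for this example, and the tests are plain functions that a runner such as pytest would typically collect.

```python
# Hypothetical application code, invented for illustration only.
def apply_discount(price, percent):
    """Return price reduced by percent, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class Cart:
    """A tiny in-memory shopping cart."""

    def __init__(self):
        self.items = []  # list of (name, price) tuples

    def add(self, name, price):
        self.items.append((name, price))

    def total(self, discount_percent=0):
        subtotal = sum(price for _, price in self.items)
        return apply_discount(subtotal, discount_percent)


# Unit test: exercises a single function in isolation.
def test_apply_discount_unit():
    assert apply_discount(200.0, 25) == 150.0


# Integration test: checks that Cart and apply_discount work together.
def test_cart_total_integration():
    cart = Cart()
    cart.add("book", 30.0)
    cart.add("pen", 10.0)
    assert cart.total(discount_percent=50) == 20.0
```

Functional and end-to-end tests follow the same pattern but drive the application through its public interface or a real browser, so they are usually slower and fewer in number.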

Challenges in Implementing Test Automation

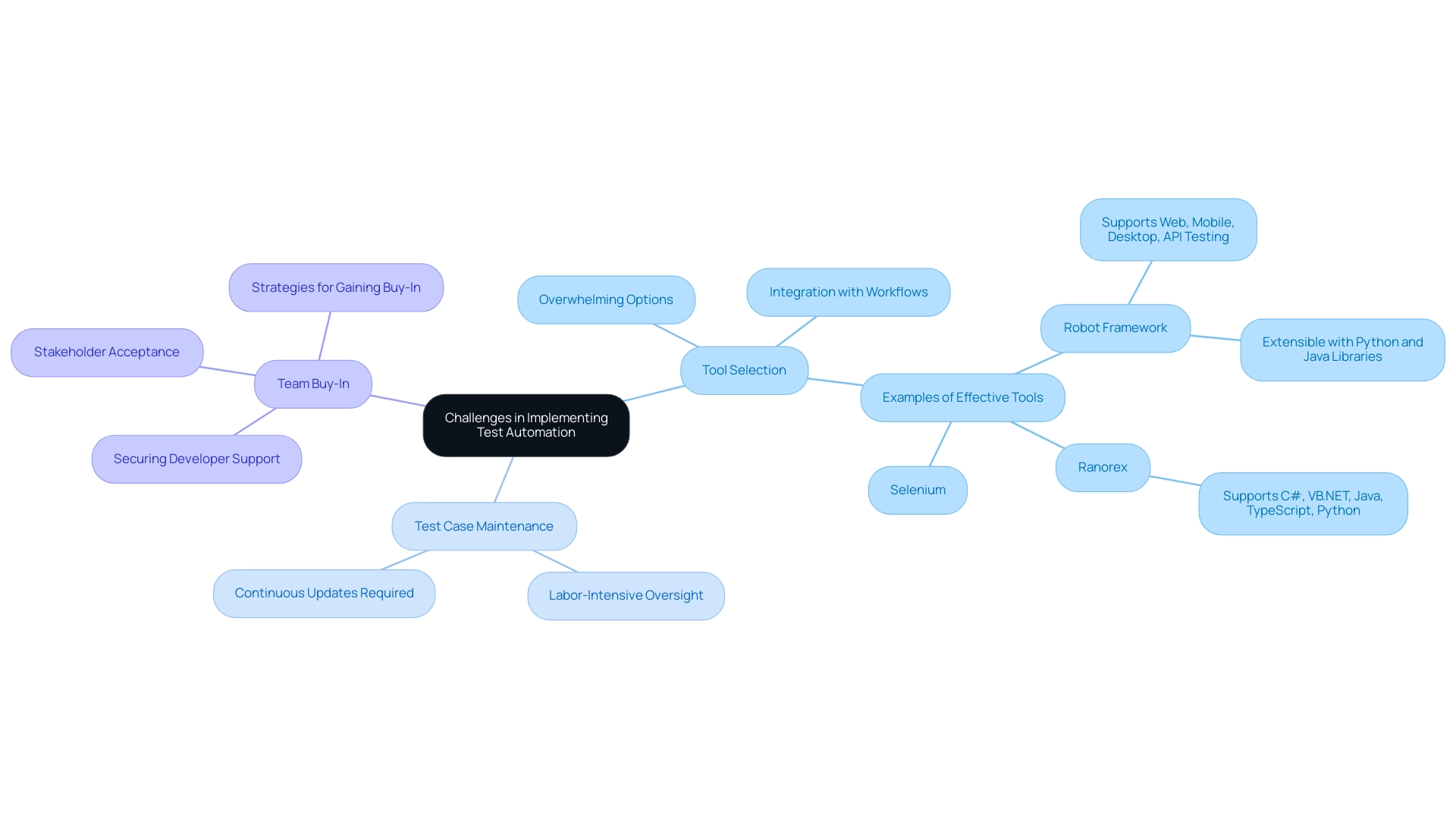

Implementing test automation comes with obstacles that can limit its effectiveness. Recent statistics indicate that over 60% of organizations struggle with choosing the appropriate automation tools, resulting in delays and higher expenses. Among the most pressing issues are:

- The selection of the right tools

- The management of test case maintenance

- The necessity of securing team buy-in

With so many options available, choosing a suitable testing framework can quickly become overwhelming. It's essential to remember that, as highlighted by industry expert Jonny Steiner,

the ideal tool seamlessly integrates with your existing workflows and empowers your team to deliver high-quality software more confidently.

A notable example is Robot Framework, an open-source framework that supports web, mobile, desktop, and API testing using a keyword-driven approach. It addresses many of the challenges of test automation and can be extended through libraries written in Python and Java.
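To make the keyword-driven idea concrete, here is a toy runner in plain Python. It is only a sketch of the general technique and does not use Robot Framework's syntax or API; every name in it (`open_page`, `type_text`, `field_should_be`, `KEYWORDS`, `run_test`) is hypothetical, and the "browser" is just a dictionary.

```python
# A toy keyword-driven runner. All names are hypothetical and are
# NOT part of Robot Framework; the "browser" is a plain dict.

def open_page(state, url):
    state["url"] = url


def type_text(state, field, text):
    state.setdefault("fields", {})[field] = text


def field_should_be(state, field, expected):
    actual = state.get("fields", {}).get(field)
    if actual != expected:
        raise AssertionError(f"{field!r}: expected {expected!r}, got {actual!r}")


# Human-readable keywords mapped to the functions that implement them.
KEYWORDS = {
    "Open Page": open_page,
    "Type Text": type_text,
    "Field Should Be": field_should_be,
}


def run_test(steps):
    """Run each (keyword, *args) row in order; return 'PASS' or 'FAIL: ...'."""
    state = {}
    for keyword, *args in steps:
        try:
            KEYWORDS[keyword](state, *args)
        except AssertionError as exc:
            return f"FAIL: {keyword}: {exc}"
    return "PASS"


# A test case is just a table of keywords and arguments.
login_test = [
    ("Open Page", "https://example.test/login"),
    ("Type Text", "username", "demo"),
    ("Field Should Be", "username", "demo"),
]

print(run_test(login_test))  # prints PASS
```

The appeal of this style is that test cases read as tables of actions, so people who do not write code can still review or author them, while the keyword implementations stay in one place.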

Furthermore, as applications evolve, maintaining test cases becomes a labor-intensive task that requires continuous attention. Finally, gaining buy-in from both developers and stakeholders is essential; without their backing, automation initiatives may encounter considerable resistance. Identifying these challenges early enables teams to create effective strategies to address them, paving the way for smoother processes and ultimately improving the overall quality of software delivery.

Additionally, tools like Ranorex and Selenium have shown high adoption rates among organizations, demonstrating their effectiveness in various testing scenarios.

Best Practices for Effective Test Automation

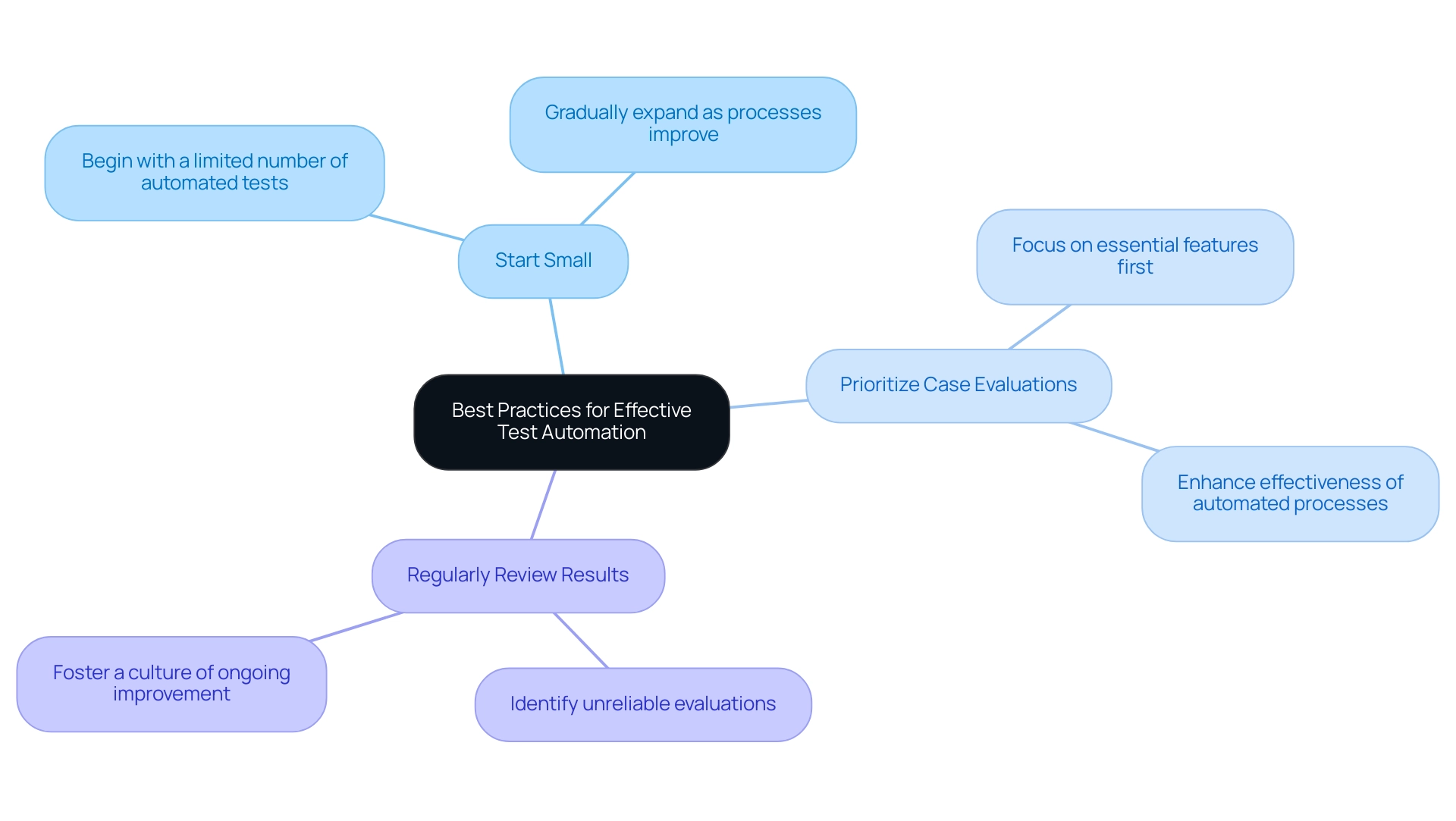

To automate testing effectively, teams should adopt several best practices, including:

- Starting small

- Prioritizing test cases

- Regularly reviewing results

Starting with a small number of automated tests lets teams refine their processes before scaling up. Prioritizing test cases ensures that the most critical features are covered first, which makes the automation effort more effective. Regularly reviewing test results helps identify unreliable (flaky) tests and areas for improvement, fostering a culture of continuous improvement. By following these best practices, teams can optimize their test automation efforts and drive better outcomes.
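One way to review results for unreliable tests is to compare outcomes across repeated runs of the same code. The sketch below assumes a hypothetical data shape (one dictionary of test name to pass/fail per run) and a hypothetical helper, `find_flaky_tests`; real CI systems expose this history in their own formats.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return sorted names of tests that both passed and failed across runs.

    `runs` is a list of dicts mapping test name -> True (pass) or False (fail),
    one dict per run of the same code revision (an assumed format).
    """
    outcomes = defaultdict(set)
    for run in runs:
        for name, passed in run.items():
            outcomes[name].add(passed)
    # A test is flagged only if it has been seen both passing and failing.
    return sorted(name for name, seen in outcomes.items() if seen == {True, False})


# Hypothetical results from three runs of the same revision.
history = [
    {"test_login": True, "test_checkout": True, "test_search": False},
    {"test_login": True, "test_checkout": False, "test_search": False},
    {"test_login": True, "test_checkout": True, "test_search": False},
]

print(find_flaky_tests(history))  # prints ['test_checkout']
```

Note that `test_search` is not flagged: it fails every time, which points to a genuine defect or a broken test rather than flakiness.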

Conclusion

In the realm of web application development, the significance of test automation should not be underestimated. By leveraging specialized tools to conduct pre-scripted tests, organizations can effectively identify bugs and validate functionality across diverse environments. This approach not only enhances efficiency but also mitigates the risks associated with manual testing, which is often time-consuming and susceptible to human error.

Central to successful test automation are the principles of maintainability, reliability, and scalability. These principles ensure that automated tests can adapt to the evolving landscape of applications, consistently deliver accurate results, and expand alongside the application’s complexity. By adhering to these foundational elements, organizations can establish a robust testing framework that aligns with their development goals.

However, the journey to effective test automation is not without its challenges. From selecting the right tools to managing test case maintenance and securing stakeholder buy-in, organizations must navigate a myriad of obstacles. Recognizing these challenges early on and implementing best practices—such as starting small and prioritizing critical test cases—can significantly enhance the automation process.

Ultimately, mastering test automation is essential for any development team aiming to deliver high-quality software efficiently. By embracing the key principles and addressing the inherent challenges, organizations can not only improve their testing processes but also drive greater overall success in software delivery. The path to effective test automation is a journey worth undertaking, as it paves the way for greater reliability, improved performance, and a competitive edge in an increasingly complex digital landscape.